AI has only been around for a short amount of time, but it already feels like we, as a species, are leaning on it way too heavily and way too soon.

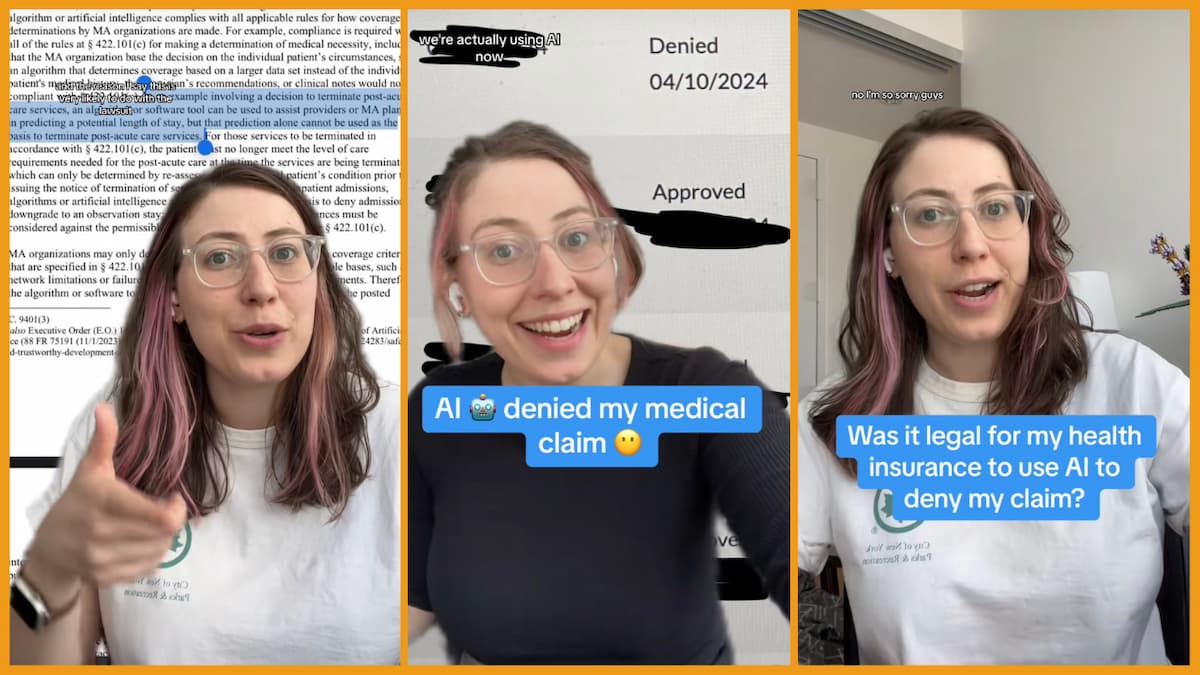

As cool and useful as AI can be, it can also be a massive pain to deal with, as one woman found out after trying to file an insurance claim. Alberta shared the ordeal on TikTok, venting about an artificial intelligence system that rejected her claim even though the same claim had gone through without any problems in the past, you know, when actual humans were dealing with this stuff.

Alberta clarifies that the claim wasn’t for anything controversial: “it was my annual physical. which I think Obamacare made it so you quite literally have to cover the annual physical.” Quick side note: while many people are under the impression that Obamacare/the ACA does this, that’s not entirely true, as this article from the L.A. Times explains.

We know the insurer has paid for her annual physicals before, though, so it sounds like this one should be covered for Alberta too; but not according to the AI. To make matters worse, the robot doesn’t even offer Alberta an explanation for why it rejected her claim, and the humans couldn’t offer any help either.

The insurance company tried to explain that the AI’s decision could not be undone, but that it might “change its mind.” Yeah. Apparently, this thing, which is essentially a string of code, has been known to just change its mind, like it gets some sick kick out of toying with people.

Insurance companies sucked already; now we can safely say that they suck even more. Commenters on the video were appalled: some called for a lawsuit against the company, some wanted it named and shamed, but all agreed that AI should not have the power to decide these sorts of things.

“Please sue your insurance company, seriously contact a lawyer”

“what insurance is this bc i need to know to stay away from it”

“i read a paper about machine learning fairness…basically, ai is not ready to fairly judge these things”

Alberta did make a follow-up video in which she explained that she didn’t want to be involved in a lawsuit, but she did talk about two insurance companies, Humana and UnitedHealthcare, that were facing lawsuits over their use of AI to deny coverage. According to an article from CBS News, “roughly 90% of the tool’s denials on coverage were faulty.”

This whole thing is just so frustrating to hear. Should AI really be used for things like this if it isn’t 100% mistake-proof? If AI can reject a claim like this for no reason, can we not assume that it could also reject life-saving treatment for someone because it just didn’t feel like approving it on that particular day?

Sorry if I sound like a technophobe. It’s because I am.